Digital Musicology: Tools, Practices, and New Paradigms

- On 13 December 2025

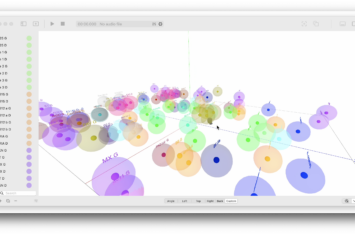

Lecture given as part of the digital humanities seminar at Université Evry Paris-Saclay. Monday 15 December 2025, 2:30 pm, Amphi 100, Bâtiment Maupertuis. This presentation offers an introduction to digital musicology, examining how tools and methods derived from digital audio technologies are profoundly transforming research practices and the epistemological frameworks of the […]
